Relooking Attention Models, SHA-RNN Overview, gMLP Briefing and Measuring Efficacy of Language Models through Perplexity

Posted September 11, 2021 by Gowri Shankar ‐ 10 min read

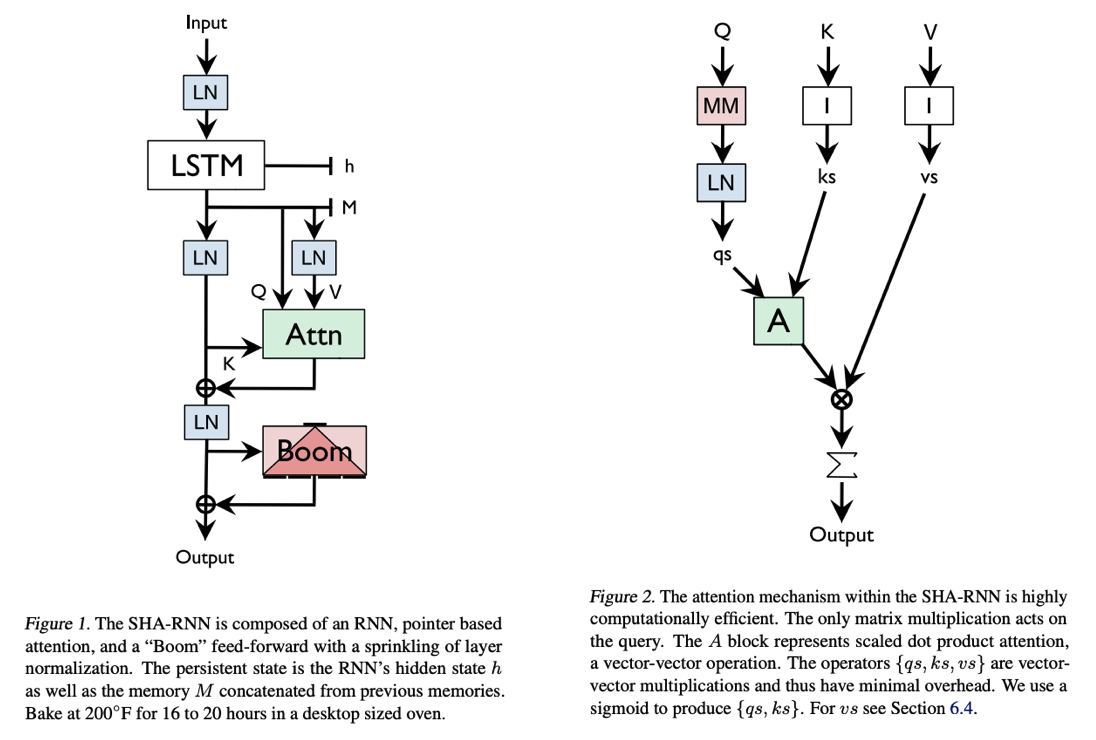

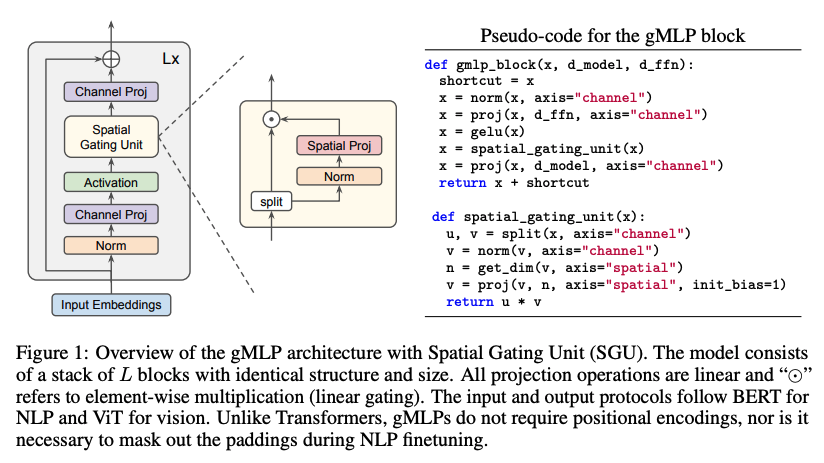

Satire and sarcasm are seldom seen in scientific writing but this is an era of memes and trolls where complex concepts are conveyed through highly comprehensible mediums(videos, animations, etc). When it comes to being critical(without hurting) about a concept or a character, sarcasm is taken as a medium in the literary renditions but seldom do we see them in the scholarly scriptures. Such sui generis is convivial and fervent for the patrons - Stephen Merity's 2019 paper titled Single Headed Attention RNN: Stop Thinking With Your Head(SHA-RNN) is one such scholarly writing where he is critical about today's (leading) approaches in language modeling especially our obsession towards the Attention Models without demonstrating outrage or distress. His paper is lucid and takes us back to celebrate the glory of yesteryear's multi-layer perceptrons. A more recent paper(Jun 2021) from Google titled Pay Attention to MLPs(gMLP) periphrastically confirms Stephen's claims with substantial empirical proof.

This post has two sections where we discuss Stephen Merity’s avowal of the foibles in Attention Models first and then take this opportunity to understand mathematical intuition behind Perplexity - the measuring metrics for Language Modeling and Language Understanding.

We discussed the simplicity of Attention Models in the past under the grounds of models that are inspired by human cognition, Please refer to the last post here…

The leading approaches in language modeling are

all obsessed with TV shows of my youth - namely

Transformers and Sesame Street. Transformers

this, Transformers that, and over here a bonfire

worth of GPU-TPU-neuromorphic wafer scale silicon.

- Stephen Merity

This post is a commentary over Stephen Merity’s 2019 Paper titled, Single Headed Attention RNN: Stop Thinking With Your Head(SHA-RNN)

Objectives

The objective of this post is to have a walk-through of Stephen Merity’s 2019 paper title Single Headed Attention RNN: Stop Thinking With Your Head(SHA-RNN) at a high level to understand the frailty of Attention Models and a briefing of gMLP models from Google. Subsequently, study the mathematical intuition behind Perplexity measure, extensively used for measuring the efficacy of Language Models.

Introduction

The idea of Transformers is not new, they are there even before the arrival of the seminal paper Attention is All You Need from Google. Inductive biases, i.e. bringing in past knowledge into the interactions is an inspiration taken from biological systems(okay, I understand your frown, I meant human cognitive systems). Past knowledge inclusion is done using the aggregation of spatial information across tokens through multi-head attention blocks. Attention Models combine a recurrent free architecture that computes the representation of individual tokens in parallel and dynamically parameterizes the attention blocks. The key question is, Do we need the dynamic parameterization - asked by both Stephen Merity and Liu, Dai et al from Google Brain in their Pay Attention to MLPs(gMLP) paper.

Language is one key aspect that shaped the process of human evolution, we are what we are because we argue, gossip, convey our thoughts, seek consensus, make opinions, craft stone inscriptions, etc. Language is the medium through which we acquire knowledge from rich sources. A meaningful conversation among human beings may or may not span for a long time but the underlying motive is not to complete it in the form of sentences or paragraphs. i.e. Today’s Language Models are awkwardly inferior by their intent because their task is to predict the $(n+1)^{th}$ token in a sequence given $n$ preceding tokens in desperation. The urgency in finishing the sentence is completely contradictory to the human language processing and understanding but some sort of universal approximation of intricacies of the text corpus fed for training.

When we talk about training models, independent researchers like Stephen Merity(or me) are at the mercy of free compute credits that we get or Colab Pro to train our models. It is practically impossible to get clusters of computing and memory resources - In such scenarios, a transformer model is a far-fetched one. With the resources of Colab Pro, I could run a maximum of 2 heads for a relatively small dataset when I was building a time series predictive model.

We need a Moore’s Law for machine learning that encourages

a minicomputer future, not a mainframe one.

- Stephen Merity

Multi-Head Attention

We have to be honest with ourselves, have we fully understood what is going on in the multi-head attention? i.e. Do we have the intuitive fix in our brains something that is beyond the following transcendental equation - I confess, I don’t have one but the equation is a swag.

$$MultiHead{(Q,K,V)}) = [H_1, \cdots, H_{m_H}]W_H$$ $$i.e.$$ $$H_h = Attention(QW^{(h)}_Q, KW^{(h)}_K, VW^{(h)}_V) \tag{Multi-Head Attention}$$

Where,

- $W^{(h)}K \in \mathbb{R}^{d{model} x d_{attn}}$ is head specific weights for keys

- $W^{(h)}Q \in \mathbb{R}^{d{model} x d_{attn}}$ is head specific weights for queries

- $W^{(h)}V \in \mathbb{R}^{d{model} x d_{V}}$ is head specific weights for values

We are trying to map sequences to sequences to predict the $i^{th}$ element of the right side sequence. In this scenario, we have to prevent leftward information flow in the decoder to preserve auto-regressive property. Hence the right elements are masked out before applying the softmax.

Compute Complexity

The $Q\odot K^T$ operation looks computationally quite daunting because we are multiplying every element of the matrix that lead to $O(n^2.d)$ complexity. Where $n$ is the length of the sequence and $d$ is the representation dimension. Meanwhile, an attention model does not have any recurrent or convolution layers, makes it a mere positional connection with a constant number of sequentially executed operations.

The attention mechanisms as used in many Transformer inspired

architectures assume no sequentiality in construction

and many complex attention heads - dozens per layer. One

might take issue with this as simple is better than complex.

- Stephen Merity

It is a rare event where the length of the sequence$(n)$ exceeds the representation dimension$(d)$, ensures the total computational complexity of the attention mechanism is far lesser than a recurrent model with a per layer complexity leads to $O(n.d^2)$. Complexity further reduces when the self-attention mechanism restricts the size of the query with a focused set of input tokens. However, we employ a huge value for $n$ when we train the state-of-the-art algorithms of the GPT or BERT order.

I have a serious concern when our models demand clusters of compute nodes and gazillion parameters to remember and process - the issue starts from individual's(or an organization) affordability and ends at carbon footprint and environmental impacts. Hence, if there is a better approach - our research should be directed towards that one and there comes the idea of SHA-RNN and gMLP.

An Alternate Approach

Stephen asks, have we made a slightly different name and direction, the field of NLP and NLU would have evolved towards minimal computation models and proposes the Single Headed Attention RNN(SHA-RNN) model that converges on a lone GPU system on envik8 dataset.

The model consists of a trainable embedding layer, one

or more layers of a stacked single head attention recurrent

neural network (SHA-RNN), and a softmax classifier. The

embedding and softmax classifier utilize tied weights.

The model uses a single head of attention, which is more

along the lines of the Continuous Cache and a modified

feedforward layer similar to that in a Transformer, which I

have referred to internally as a Boom layer.

- Stephen Merity

The Boom layer is similar to the feed-forward layer in Transformers architecture, the layer takes a static learned vector of the form $v \in \mathbb{R}^H$ ($H$ is the model’s dimensionality) and uses a matrix multiplication with $GeLU$ activation to produce $u \in \mathbb{R}^{N \times H}$. Where weight $w \in \mathbb{R}^H$ is obtained by summing $u$ along the row.

SHA-RNN Details

The static learned vector shifts during the training period and is fixed in production, they interact with dynamically produced vectors in hidden states, gating mechanisms, etc for convergence. Instead of dynamic production of the vectors, if we have fixed inputs then learning the optimal static vector could be achieved by optimizing the entire dataset. The known fact about deep learning systems is, we do not have fixed inputs. This idea is quite intriguing but making quite a lot of sense - How Stephen achieves this has to be examined from his code.

By over-parameterizing the static learned vectors we can

provide an aspect of history to these low dimensional entities.

Rather than modifying the values destructively the

model now has the option to change the eventual value by

shifting the weights within the model, the initial vector, or

the sigmoid gates.

- Stephen Merity

Let us assume there are 2 values to be optimized, $z$ the zero vector and $m$ the mask - learn the correct sigmoid gates $g$ for an output $m$. Then the model will oscillate between the zero vector and the mask vector without a need for relearning. Now the history is stored in $m$ and protected by the model only changing $g$.

$$vs = \sigma(W^fv) \odot tanh(W^cv) \tag{Overparameterized Component}$$ where,

- $f \in \mathbb{R}^H$ is forget gate produced by the learned vector $v$

- $c \in \mathbb{R}^H$ is candidate and

- Once the training is complete, these parameters are replaced with $vs$ result in a static output of the above equation - thus these parameters exist only during training and not in production

gMLP

gMLP proposes an MLP based alternative for Transformers without self-attention - having channel projections and spatial projections with static parameterization. Stephen with his limited resources proved a model outperforms Transformers for language processing, gMLP team extend the same idea of static parameterization to image classification as well and obtained strong results(their words) on ImageNet and much more.

Measuring Efficacy of a Language Model

A language model renders the probability distribution over the text and perplexity is the measure through which we evaluate the efficacy of the model. To understand perplexity well, we have to understand entropy - entropy is measured in bits and it is the average number of bits that we need to encode the information. We have studied entropy in detail, please refer the entropy post here.

Shannon’s Entropy

Shannon proved the following fact…

Suppose $H(p_1, \cdots, p_N)$ is a function, then for all N and all probability vectors $(p_1, \cdots, p_N)$

$$H(p_1, p_2, p_3, \cdots, p_N) = - \sum_{i=1}^n p_i log_2 q_i$$ $$\text{let me rewrite the equation with t to represent the tokens}$$ $$H(t) = - \sum_{x=1}^N p(t) log_2 p(t)$$

Where, $N$ is the total number of words for a language model and $q$ is our prediction

Conditional Probability of a Language Model

The popular deep learning language models are factorized over a set of tokens, i.e we have to compute the probability distribution as a product of conditional probabilities i.e

$$p(\text{transformers are expensive}) = p(\text{transformers})p(\text{are|transformers})p(\text{expensive}|\text{transformers are}) = 1$$ $$or$$ $$p(t)p(r|t)p(a|tr)p(n|tra) \cdots p(e|\text{transformers are expensiv}) = 1$$

with these intuitions in place, we can easily derive an acceptable mathematical framework for calculating the efficacy of a language model and call it perplexity.

Perplexity

If entropy $H(x)$ is the average number of bits to encode the information what could be the total amount of all possible information - It’s exponent.

Perplexity is, the weighted average number of choices a random variable has.

For e.g, if the average sentence in the test set could be coded in 100 bits,

the model perplexity is 2^100 per sentence.

- Aerin Kim

$$Perplexity = P = 2^{entropy} = 2^{\text{avg number of bits}}$$ $$P = 2^{H(x)} = 2^{- \sum_{x=1}^N p(t) log_2 q(t)}$$

let us assume all words have equal frequency, i.e probability of $(n+1)^{th}$ word is equal to any $i^{th}$ word in the sequence, $$p(x) = \frac{1}{N}$$ $$then$$ $$P(t_1, \cdots, t_N) = e^{- \sum_{t=1}^N \frac{1}{N} ln q(t)}$$ $$i.e$$ $$\Large P(t_1, \cdots, t_N) = e^{- \frac{1}{N} \sum_{t=1}^N ln q(t_i|t_1, \cdots, t_{i-1})}$$

$$P(t_1, \cdots, t_N) = q(t_1)^{- \frac{1}{N}}q(t_2)^{- \frac{1}{N}}q(t_3)^{- \frac{1}{N}} \cdots q(t_N)^{- \frac{1}{N}}$$

$$P(t_1, \cdots, t_N) = \Pi_{i=1}^N q(t_i)^{- \frac{1}{N}}$$

$$\Large P(t_1, \cdots, t_N) = \sqrt[N]{\frac{1}{q(t_1)q(t_2)q(t_3), \cdots, q(t_N)}} \tag{Perplexity}$$

The exponent part is nothing but scaled log probability of the text, a good language model puts high probability on real text that leads to lower perplexity.

Conclusion

I am confused and anxious, as a community, the approach and direction we take in achieving human-like intelligent agents on a silicon wafer are mostly inspired by the human cognitive system. Then, how we ended up knitting clusters of compute nodes to comprehend a few sentences and images, Aint we are driven by the intriguing efficiency and power consumption of the human brain to solve complex problems - A human brain consumes just 20W of power to create cities and civilization. Stephen Merity’s paper is a thought-provoking and good read. In this post, we walked through his paper at a high level, I swear I will get full confidence only when I code his hypothesis and see how it works but I trust him. Further, I did not find anything interesting in the gMLP paper that was not mentioned in Stephen’s seminal paper that shows us light on the foibles of Transformers. Meanwhile, I am glad gMLP authors confirm Stephen’s approach is superior despite the fact they did not give credits or attributions in their scholarly work.

References

- Single Headed Attention RNN: Stop Thinking With Your Head by Stephen Merity, 2019

- Attention Is All You Need Vaswani et al, 2017

- Pay Attention to MLPs Liu, Dai. et al, 2021

- Hutter Prize from Wikipedia

- Perplexity by Stephen Chestnut, 2019

- Perplexity Intuition (and its derivation) by Aerin Kim, 2018